Diemo Schwarz

Fine Sound Art since 1993

CataRT

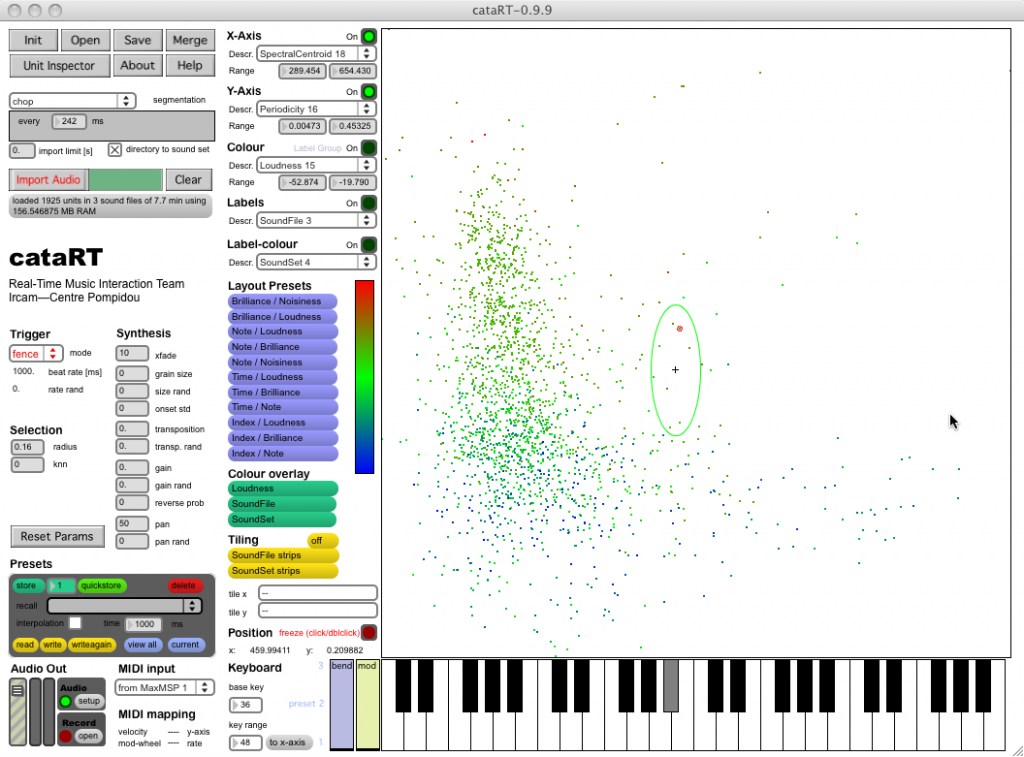

The real-time corpus-based concatenative sound synthesis system CataRT plays grains from a large corpus of segmented and descriptor-analysed sounds according to proximity to a target position in the descriptor space. This can be seen as a content-based extension to granular synthesis providing direct access to specific sound characteristics.
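The selection principle described above can be illustrated with a minimal sketch. This is not CataRT's code (CataRT runs in Max/MSP): the corpus entries, the two normalised descriptors (imagined here as brightness and loudness), the function names, and the choice of a weighted Euclidean distance are all assumptions made for illustration only.

```python
import math

def distance(a, b, weights):
    """Weighted Euclidean distance between two descriptor vectors (assumed metric)."""
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))

def select_units(corpus, target, k=1, weights=None):
    """Return the k units whose descriptors lie closest to the target position."""
    if weights is None:
        weights = [1.0] * len(target)  # equal weight for every descriptor
    ranked = sorted(
        corpus,
        key=lambda unit: distance(unit["descriptors"], target, weights),
    )
    return ranked[:k]

# Hypothetical corpus: each unit (grain) carries a descriptor vector,
# here (brightness, loudness), both normalised to the range 0..1.
corpus = [
    {"name": "low_soft", "descriptors": (0.1, 0.2)},
    {"name": "low_loud", "descriptors": (0.2, 0.9)},
    {"name": "high_soft", "descriptors": (0.8, 0.1)},
    {"name": "high_loud", "descriptors": (0.9, 0.8)},
]

# A target position in descriptor space, e.g. coming from a mouse or a sensor.
nearest = select_units(corpus, target=(0.9, 0.6), k=2)
print([unit["name"] for unit in nearest])
```

In a real-time setting the selected units would then be played back as grains. Sorting the whole corpus on every query is only practical for small corpora; a large corpus would call for a spatial index such as a k-d tree.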

CataRT is implemented in Max/MSP and takes full advantage of the generalised data structures and arbitrary-rate sound processing facilities of the FTM and Gabor libraries. Segmentation and sound descriptors are loaded from text or SDIF files, or analysed on the fly.

CataRT lets the user explore the corpus interactively or via a target sequencer, resynthesise an audio file or live input with the source sounds, or experiment with expressive speech synthesis and gestural control.

CataRT is available from the IRCAM Real-Time Musical Interactions Team’s web site.
